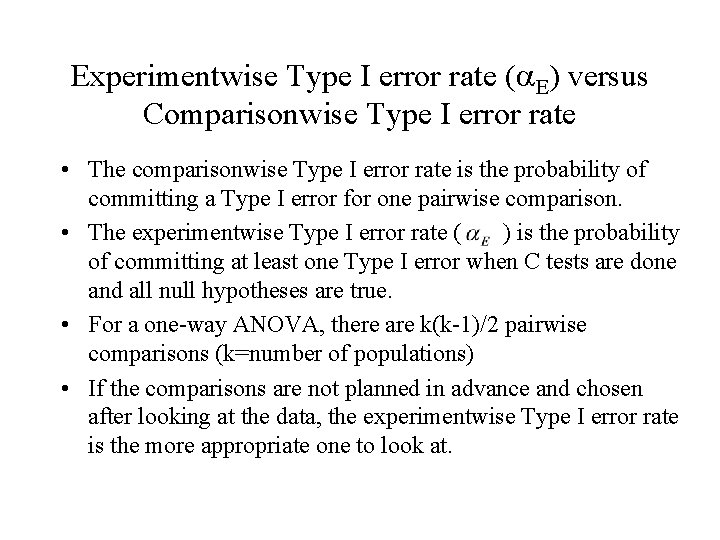

This guide explains the experimentwise error rate: the likelihood of committing at least one Type I error across an entire study when the analysis involves several comparisons. The experimentwise error rate is different from the per-test (comparisonwise) error rate, which is the likelihood of a Type I error when performing one specific test, also known as a comparison.

An ANOVA could instead be carried out as a series of two-sample tests. For example, to decide whether or not to reject the following null hypothesis:

$H_0: \mu_1 = \mu_2 = \mu_3$

What is the Experimentwise error rate for the Tukey multiple comparisons?

Tukey's HSD method. In an example with an experimentwise error rate of 5% and three treatments, different types of oil are compared pairwise. The conclusion is that STANDARD is significantly different from MULTI, but none of the other comparisons is significant.

We can use three null hypotheses:

- $H_0: \mu_1 = \mu_2$

- $H_0: \mu_2 = \mu_3$

- $H_0: \mu_1 = \mu_3$

If any of these null hypotheses is rejected, the original null hypothesis is rejected.

Note, however, that if you set $\alpha = 0.05$ for each of the three sub-analyses, then the overall alpha value is about 0.14, since $1 - (1 - \alpha)^3 = 1 - (1 - 0.05)^3 = 0.142625$ (see Example 6). This means that the probability of rejecting the null hypothesis even when it is true (a Type I error) is 14.2625%.

For $k$ groups, you would need to run $m = \mathrm{COMBIN}(k, 2)$ such tests, so the resulting overall alpha would be $1 - (1 - \alpha)^m$, a value that gets higher and higher as the number of samples increases. For example, if $k = 6$, then $m = 15$, and the probability of finding at least one significant t-test purely by chance, even when the null hypothesis is true, exceeds 50%.
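The growth of the overall alpha with the number of groups can be checked numerically. The following is a minimal sketch, assuming (as the formula above does) that the pairwise tests are independent. The function names `pairwise_tests` and `familywise_alpha` are illustrative and not taken from any library.

```python
from math import comb


def pairwise_tests(k: int) -> int:
    """Number of pairwise comparisons among k groups, i.e. COMBIN(k, 2)."""
    return comb(k, 2)


def familywise_alpha(alpha: float, m: int) -> float:
    """Chance of at least one Type I error in m tests at level alpha.

    Assumes the m tests are independent, which is a simplification.
    """
    return 1 - (1 - alpha) ** m


# Overall alpha for 3, 4 and 6 groups when every test uses alpha = 0.05.
for k in (3, 4, 6):
    m = pairwise_tests(k)
    print(f"k={k}: m={m}, overall alpha={familywise_alpha(0.05, m):.6f}")
```

With six groups the overall alpha is already above one half, which matches the 50% figure quoted in the text.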

Indeed, one of the reasons for performing ANOVA instead of separate t-tests is to reduce the Type I error. The only problem is that once you have performed ANOVA, if the null hypothesis is rejected, you will naturally want to determine which groups have unequal means, and so you will need to confront this issue anyway.

To obtain a combined Type I error rate (called the experimentwise error rate or familywise error rate) of 0.05 for the three separate tests, you need to set each alpha to a value such that $1 - (1 - \alpha)^3 = 0.05$, i.e. $\alpha = 1 - (1 - 0.05)^{1/3} = 0.016952$. As mentioned in Statistical Power, for the same sample size this reduces the power of the individual t-tests. If the experimentwise error rate is < 0.05, the error rate is called conservative. If it is > 0.05, the error rate is called liberal.
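The per-test alpha can be computed by inverting the overall-alpha formula. This is a small sketch under the same independence assumption. The helper name `per_test_alpha` is hypothetical.

```python
def per_test_alpha(familywise: float, m: int) -> float:
    """Per-test alpha that yields the target familywise rate over m independent tests."""
    return 1 - (1 - familywise) ** (1 / m)


alpha_each = per_test_alpha(0.05, 3)
print(f"per-test alpha: {alpha_each:.6f}")

# Plugging the per-test alpha back into the forward formula recovers the target.
print(f"recovered overall alpha: {1 - (1 - alpha_each) ** 3:.6f}")
```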

What is the comparisonwise error rate?

One such measure is the comparisonwise error rate, which is defined as the number of Type I errors divided by the total number of comparisons. For example, if we have four treatments to compare, we need to make 6 pairwise comparisons.
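The count of six comparisons for four treatments can be verified by listing the pairs. The treatment labels below are placeholders chosen for illustration.

```python
from itertools import combinations

treatments = ["A", "B", "C", "D"]  # hypothetical treatment labels

# Every unordered pair of treatments is one pairwise comparison.
pairs = list(combinations(treatments, 2))
for first, second in pairs:
    print(f"{first} vs {second}")
print(len(pairs), "pairwise comparisons")
```

The same enumeration with five groups gives ten pairs.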

There are two types of follow-up tests after ANOVA: planned (also called a priori) and unplanned (also called a posteriori or post hoc). Planned tests are determined before the data are collected, while unplanned tests are chosen after the data are collected. These tests have entirely different Type I error rates.

What is the comparisonwise error rate?

The comparisonwise error rate is the probability of a Type I error (rejecting a true $H_0$) for any one comparison. In a five-group design, there are ten comparisonwise error rates, one for each of the ten possible pairs.

What does the term Familywise error rate mean?

In statistics, the familywise error rate (FWER) is the probability of making one or more false discoveries, or Type I errors, when performing multiple hypothesis tests.

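As an example of the difference between planned and unplanned tests, suppose there are 4 groups. If an alpha of 0.05 is used for a planned test of a null hypothesis, the Type I error rate is 0.05. If the same hypothesis is chosen only after the data have been seen, for example because $\mu_1$ and $\mu_2$ appear to be the smallest means and $\mu_3$ and $\mu_4$ the largest, the Type I error rate will be higher than 0.05.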

What does the term Familywise error rate mean?

The familywise error rate (FWE or FWER) is the probability of reaching at least one false conclusion in a series of hypothesis tests. In other words, it is the probability of making at least one Type I error. The FWER is also called alpha inflation or cumulative Type I error.
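This definition can be illustrated with a small simulation. The sketch below assumes three independent tests whose null hypotheses are all true, so each p-value is uniform on [0, 1]. The function name `simulate_fwer`, the seed and the trial count are illustrative choices.

```python
import random


def simulate_fwer(alpha: float, m: int, trials: int, seed: int = 0) -> float:
    """Estimate the chance that at least one of m true-null tests rejects at level alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Under a true null hypothesis, a p-value is uniformly distributed on [0, 1].
        if any(rng.random() < alpha for _ in range(m)):
            hits += 1
    return hits / trials


estimate = simulate_fwer(alpha=0.05, m=3, trials=100_000)
print(f"simulated FWER: {estimate:.4f}")
print(f"formula 1 - (1 - alpha)^m: {1 - (1 - 0.05) ** 3:.4f}")
```

The simulated value should land close to the value given by the formula, with small differences due to sampling noise.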

How is Familywise error rate calculated?

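In practice the familywise error rate is controlled by adjusting the significance level used for each of the $m$ tests. Common corrections include:

- Bonferroni correction. Adjust the $\alpha$ value used to assess significance to $\alpha / m$.

- Šidák correction. Adjust the $\alpha$ value used to assess significance to $1 - (1 - \alpha)^{1/m}$.

- Holm-Bonferroni correction. This procedure sorts the p-values from smallest to largest, compares the $i$-th smallest with $\alpha / (m - i + 1)$, and stops at the first p-value that is not significant.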
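The three corrections can be sketched in a few lines. The source omits the formulas, so the textbook definitions are assumed here. The function names and the p-values are hypothetical and serve only as an illustration.

```python
def bonferroni(alpha: float, m: int) -> float:
    """Bonferroni per-test level: alpha divided by the number of tests."""
    return alpha / m


def sidak(alpha: float, m: int) -> float:
    """Sidak per-test level, exact when the m tests are independent."""
    return 1 - (1 - alpha) ** (1 / m)


def holm_reject(p_values: list[float], alpha: float) -> list[bool]:
    """Holm step-down procedure; decisions are returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The smallest p-value is compared with alpha/m, the next with alpha/(m-1), ...
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # once one test fails, all larger p-values are retained
    return reject


p_values = [0.010, 0.040, 0.030]  # hypothetical p-values for three comparisons
print("Bonferroni level:", bonferroni(0.05, 3))
print("Sidak level:", sidak(0.05, 3))
print("Holm decisions:", holm_reject(p_values, 0.05))
```

The Šidák level is slightly larger than the Bonferroni level, and Holm's procedure never rejects fewer hypotheses than plain Bonferroni at the same $\alpha$.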